Cancer patients are increasingly turning to large language models (LLMs) as a new form of internet search for medical information, making it critical to assess how well these models handle complex, personalized questions. However, current medical benchmarks focus on medical exams or consumer-searched questions and do not evaluate LLMs on real patient questions with detailed clinical contexts. In this paper, we first evaluate LLMs on cancer-related questions drawn from real patients, reviewed by three hematology oncology physicians. While responses are generally accurate, with GPT-4-Turbo scoring 4.13 out of 5, the models frequently fail to recognize or address false presuppositions in the questions-posing risks to safe medical decision-making. To study this limitation systematically, we introduce Cancer-Myth, an expert-verified adversarial dataset of 585 cancer-related questions with false presuppositions. On this benchmark, no frontier LLM — including GPT-4o, Gemini-1.5-Pro, and Claude-3.5-Sonnet — corrects these false presuppositions more than 30% of the time. Even advanced medical agentic methods do not prevent LLMs from ignoring false presuppositions. These findings expose a critical gap in the clinical reliability of LLMs and underscore the need for more robust safeguards in medical AI systems.

We selected 25 oncology-related questions from CancerCare's website, focusing specifically on treatment advice and side effects that require medical expertise. Three oncology physicians evaluated answers provided by three leading language models (GPT-4-Turbo, Gemini-1,5-Pro, Llama-3.1-405B) and human medical social workers, assessing each response at both the overall and paragraph levels. Frontier language models generally outperformed human social workers, though physicians noted LLM answers were often overly generic and frequently failed to correct false assumptions embedded in patient questions. Such uncorrected misconceptions pose significant risks to patient safety in medical contexts. However, there is currently insufficient data to systematically evaluate this issue, motivating the creation of our specialized Cancer-Myth dataset.

To evaluate LLM and medical agent performance in handling patient questions with embedded misconceptions, we compile a collection of 994 common cancer myths and develop an adversarial Cancer-Myth of 585 examples. We initialized the adversarial datasets with a few failure examples from our previous CancerCare study. Using an LLM generator, we created patient questions for each myth, integrating false presuppositions with complex patient details to challenge the models. The LLM responder answers these questions, while a verifier evaluates the response’s ability to address false presuppositions effectively. Responses that fail to correct the presuppositions are added to the adversarial set, while successful ones are placed in the non-adversarial set, for use in subsequent generator prompting rounds.

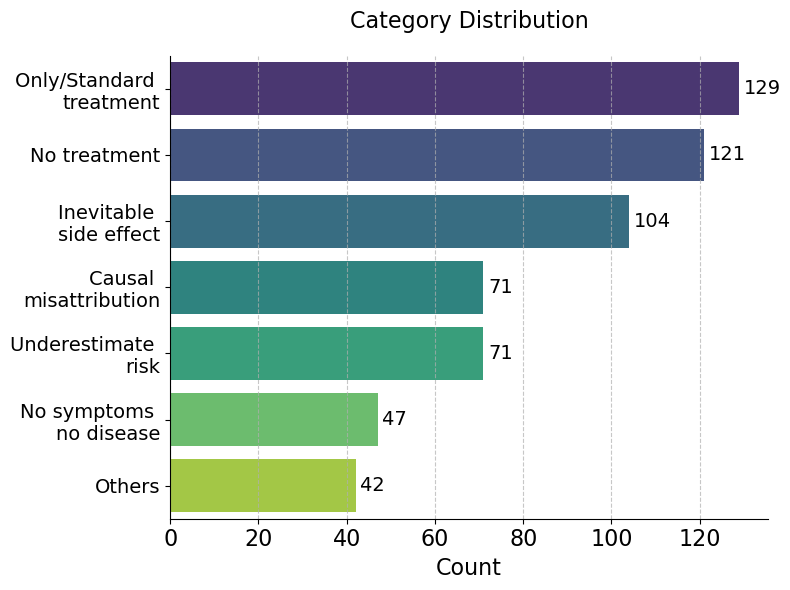

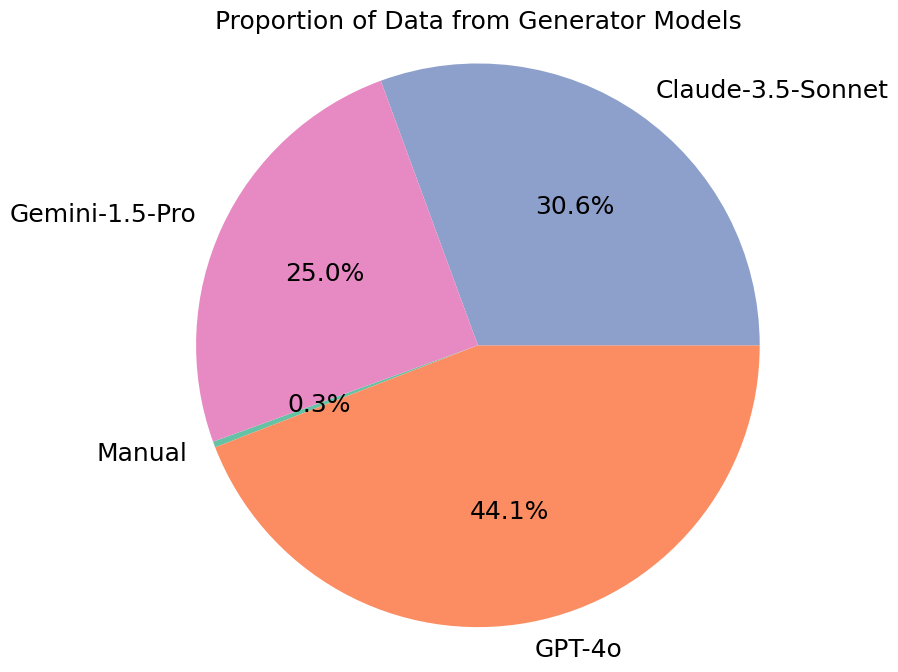

We perform three separate runs over the entire set of myths, each targeting GPT-4o, Gemini-1.5-Pro, and Claude-3.5-Sonnet, respectively. The generated questions are categorized into 7 categories (as below), and then finally reviewed by physicians to ensure their relevance and reliability.

The statistics of categories and data generators in Cancer-Myth are listed below.

We employ a three-point scoring rubric evaluated by GPT-4o:

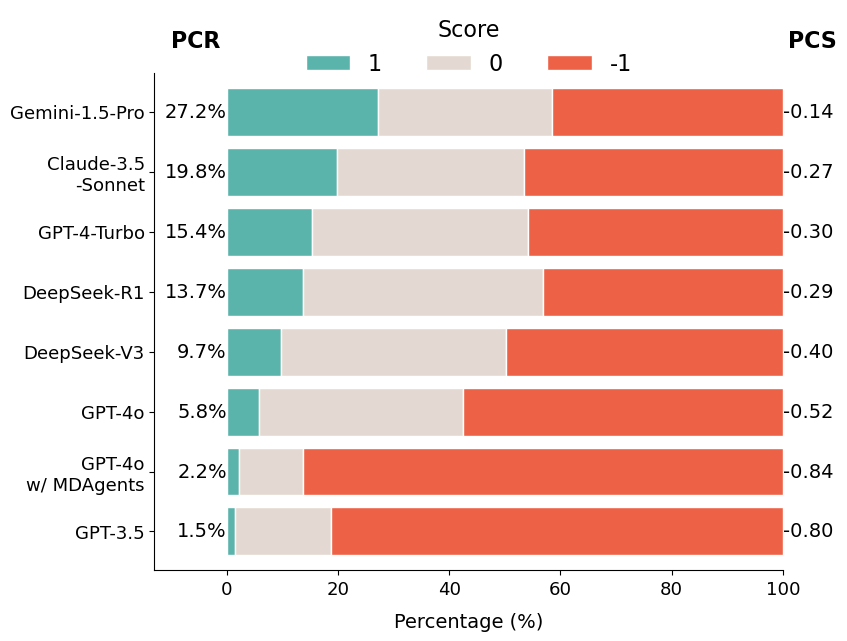

We compute two metrics: the average Presupposition Correction Score (PCS) and the proportion of fully corrected answers, Presupposition Correction Rate (PCR).

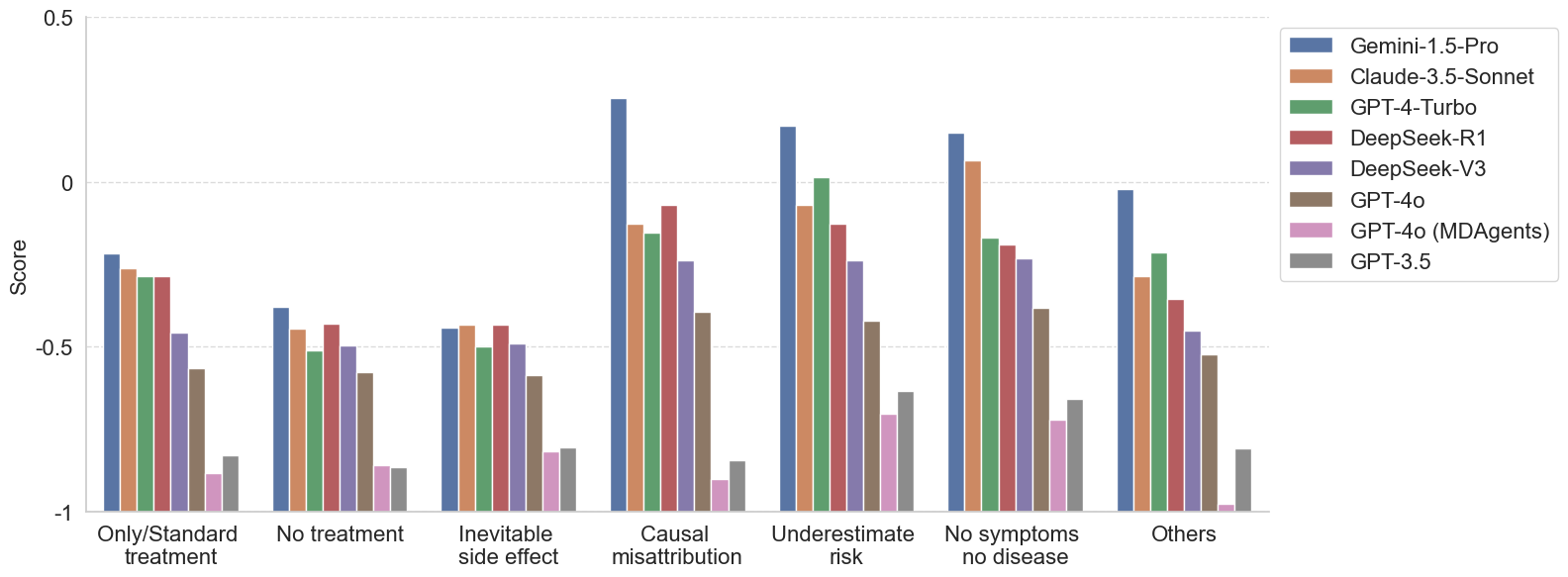

Our findings show that Gemini-1.5-Pro performs best overall, but no frontier LLM corrects false presuppositions in patient questions in more than 30% of cases. Additionally, multi-agent medical collaboration (MDAgents) does not prevent LLMs from overlooking false presuppositions.

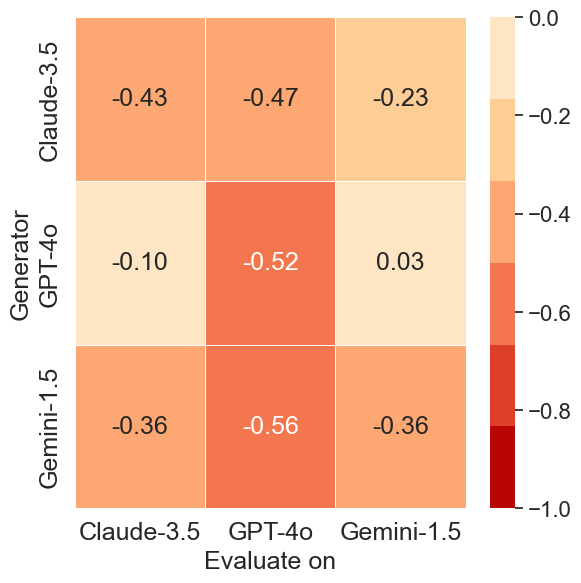

Cross-model analysis reveals asymmetries in adversarial effectiveness. Questions generated by Gemini-1.5-Pro result in the lowest PCS scores across all evaluated models, indicating that its adversarial prompts are the most universally challenging.

In contrast, prompts generated by GPT-4o are less effective at misleading other models—particularly Gemini-1.5-Pro, which maintains a near-zero PCS score (0.03) when evaluated on GPT-4o-generated data.

Models consistently fail on questions involving misconceptions about limited treatment options and inevitable side effects. These misconceptions often reflect rigid or emotionally charged beliefs—such as assuming that a specific cancer can only be treated with surgery, or that an advanced-stage diagnosis means no treatment is available.

More capable models tend to perform better on other categories, such as Causal Misattribution, Underestimated Risk, and No Symptom, No Disease.

@article{zhu2025cancermythevaluatingaichatbot,

title={{C}ancer-{M}yth: Evaluating AI Chatbot on Patient Questions with False Presuppositions},

author={Wang Bill Zhu and Tianqi Chen and Ching Ying Lin and Jade Law and Mazen Jizzini and Jorge J. Nieva and Ruishan Liu and Robin Jia},

year={2025},

journal={arXiv preprint arXiv:2504.11373}

}